TL;DR summary

Pros:

- Scans very large documents

- Easy to use

- Packs away in a convenient carrying case

Cons:

- So-so color fidelity

- Hard to feed artwork straight

- Dust and debris can easily get on the platen, ruining scans

- Relatively expensive for home use

Review

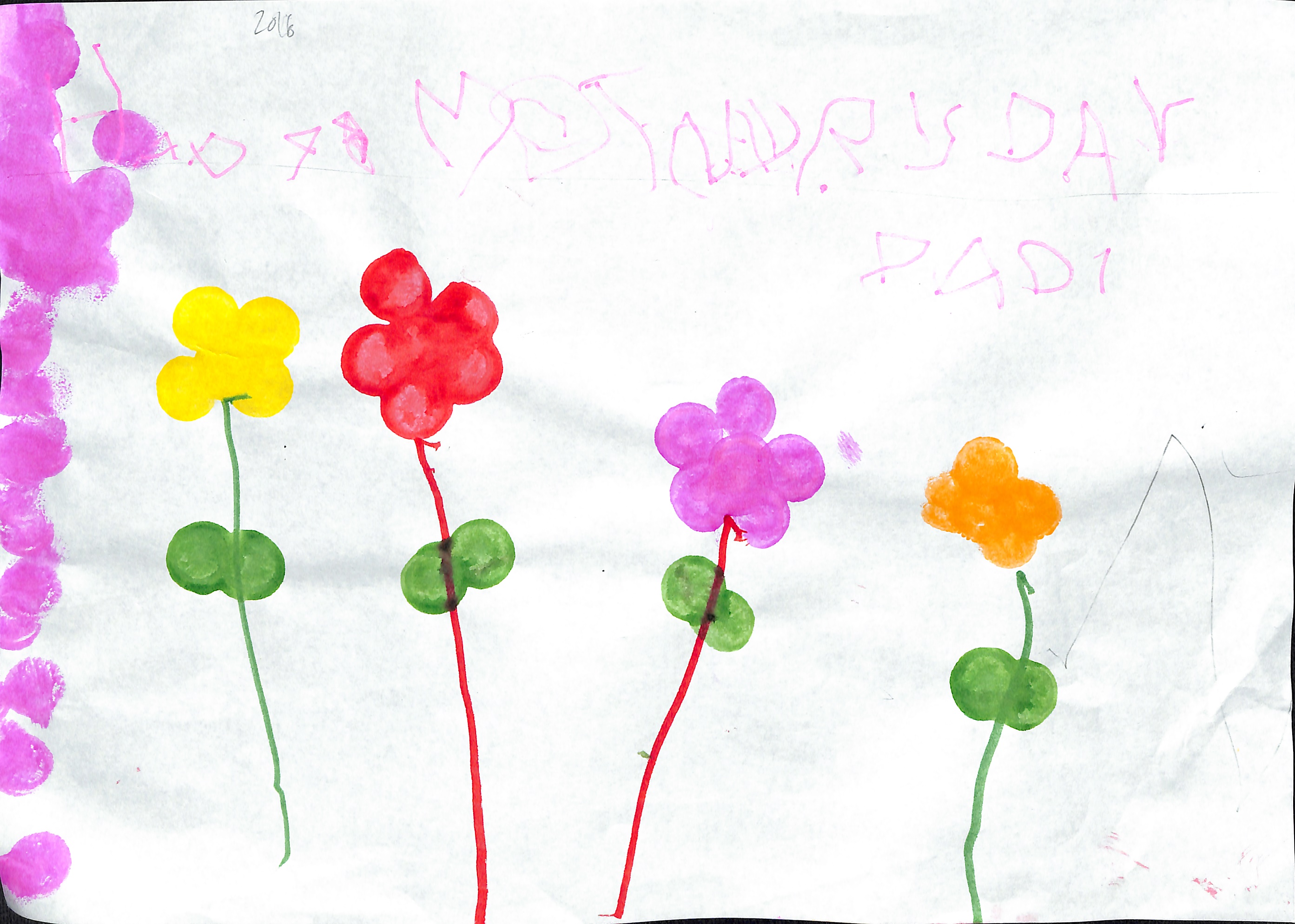

One thing you do not lack for when your child enters preschool is artwork. Preschoolers generate prodigious amounts of it, with gusto, and the pieces are often large-format, on 16×24″ paper (roughly ISO A2). The question is, what do you do with the torrent?

I decided I would scan them, then file them in Ito-Ya Art Profolios, and possibly make annual photobooks for the grandparents. This brings up the logistical challenge of digitizing such large pieces. Most flatbed scanners are limited to 8.5×14″ (US Legal) format. Some, like the Epson Expression 11000XL and 12000XL, can scan 11×17″ (A3), as can the Fujitsu ScanSnap SV600 book scanner, but that is not fully adequate either. One option would be to fold the artwork up, scan portions, then stitch them together in Autopano Giga or Photoshop, but that would be extremely cumbersome, especially when you have to do a couple per day. I do not have access to a color copier at my office, and most of these are only A3 anyway.

I purchased a Kaiser RS2 XA copy stand (cheaper to get it direct from Europe on eBay than from the usual suspects like B&H) and got a local framing shop to cut me a scrap of anti-reflective Museum Glass. This setup goes up to 16×20″ for the price of a midrange flatbed scanner, but it has drawbacks. It is tricky to set up lights so they don’t induce reflections (no AR coating is perfect), and perfectly aligning the camera with the baseboard plane is difficult (I had to shim it using a cut-up credit card). It also doesn’t solve the problem of the truly large 16×24″ artwork, and stands able to handle larger formats are extremely expensive and very bulky.

I then started looking at large-format scanners like those made by Contex or Océ. They are used by architecture firms to scan blueprints and the like, but they are extremely large and bulky, especially if you get the optional stands. Entry-level models cost $3,000-5,000 and come with onerous DRM-encumbered software that requires license dongles and, more often than not, will not run on a Mac.

That is why I was pleasantly surprised to learn that British company Colortrac makes a model called the SmartLF Scan! (I will henceforth omit the over-the-top exclamation mark). It is self-contained: it can scan to internal memory or a USB stick, although it will also work with a computer over USB or Ethernet (Windows-only, unfortunately). It is available in 24″ or 36″ wide versions, is very compact compared to its peers, and is even supplied with a nifty custom-fitted wheeled hard case. The price of $2,000 ($2,500 for the 36″ version), while steep for home use, is well within the range of enthusiast photo equipment. I sold a few unused cameras to release funds for one.

Once unpacked, the scanner is surprisingly light. It is quite wide, obviously, to be able to ingest a 24″ wide document (see the CD jewel case in the photo above for scale). There is an LCD control panel and a serviceable keypad-based (not touch) UI. The power supply is of the obnoxious wall-wart type. I wish they used text rather than inscrutable icons in the UI: it is much more informative and usable to see a menu entry for 400dpi resolution rather than checkerboard icons with various pitches.

After selecting your settings (or saving them as defaults), you load paper by feeding it from the front, face up. It is quite hard to feed large-format paper straight, and this is compounded by the lack of guides. On the other hand it is hard to see how Colortrac could have fitted photocopier-style guide rails in such a compact design, and they would be likely to break.

The scanner is simplex, not duplex, unsurprisingly at that price point. The sensor is on top of the feed, which helps control dust and debris sticking to it, but when scanning painted artwork, there will inevitably be crumbs of paint that will detach and stick to the sensor platen. This manifests itself as long dark vertical lines spoiling subsequent scans, something I occasionally also see on my Fujitsu ScanSnap document scanner. Cleaning the Colortrac is way easier than on the ScanSnap, as unfolding rear legs and releasing front catches opens it wide, and a few passes with optical cleaning wipes (I use Zeiss’ single-use ones) will do the trick.

By the manufacturer’s own admission, the scanner is designed to scan technical drawings, not art. It uses a linear contact image sensor (CIS) like lower-end flatbed scanners and document scanners, unlike the higher-fidelity charge-coupled device (CCD) sensors used in higher-end graphic arts and photo scanners. The light source is a row of point light LEDs that casts relatively harsh shadows on the paper. They do make CCD scanners for graphic arts, but they start at $10,000… Contex makes an A2 flatbed CCD scanner, the HD iFlex, but it costs $6,700 (at Jet.com of all places). Their iQ Quattro 2490 at $4,500 is the most viable step-up: it uses a CIS, but offers 16-bit color, AdobeRGB and beyond gamut, calibration and magnetic paper guides.

The scanner’s resolution is 600dpi. Scanning 16×24″ originals at that resolution yields a 138MP file that is nearly a gigabyte in size. The 400dpi setting yields a much more reasonable 200MB or so, and compressing them further using tiffcp with zip compression (not an option on the scanner) yields 130-140MB files.
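As a back-of-the-envelope check on those numbers, here is a small Python sketch of the arithmetic. The `scan_size` helper is purely illustrative, and it assumes uncompressed 24-bit RGB (3 bytes per pixel), which is my assumption rather than anything documented for the scanner. Under that assumption the 400dpi figure lands near the 200MB quoted above, while 600dpi comes out around 415MB; doubling `bytes_per_pixel` to 6 (48-bit color) would roughly double that, so actual file sizes depend on the bit depth and format the scanner writes.

```python
def scan_size(width_in, height_in, dpi, bytes_per_pixel=3):
    """Estimate megapixels and uncompressed size (in MB) of a scan.

    bytes_per_pixel=3 assumes 24-bit RGB; this is an assumption,
    not a documented property of the scanner.
    """
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    pixels = width_px * height_px
    megapixels = pixels / 1e6
    megabytes = pixels * bytes_per_pixel / 1e6
    return megapixels, megabytes


# A 16x24 inch original at the two resolutions discussed above
for dpi in (400, 600):
    mp, mb = scan_size(16, 24, dpi)
    print(f"{dpi} dpi: {mp:.1f} MP, about {mb:.0f} MB uncompressed at 24-bit")
```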

Unfortunately, I ended up returning it. There was a 1cm scratch in the glass platen, which manifested itself as streaks. It takes quite a bit to scratch glass (I don’t think it was Lexan or similar), and I wasn’t scanning sandpaper, so it must have been a factory defect, or the unit was a customer return. When I looked at the color fidelity of the scans, I was not inclined to order a replacement, and instead got a Fujitsu ScanSnap SV600 from Japan through a third-party Amazon reseller (25% savings over the US price, even if you usually forgo a US warranty on grey-market imports).