A tale of three lenses

Introduction

This is a quick and semi-scientific comparison of three normal prime lenses for the Nikon system: the 45mm f/2.8P Nikkor, the 50mm f/1.8 AF Nikkor and the 60mm f/2.8D AF Micro-Nikkor.

Why these three lenses? Simply because I happened to have them on hand. The 45mm is the lens I use on my FM3A (see also my Epinions.com review), the 60mm macro is the lens I bought along with a D100 for my father, and the 50mm f/1.8 (non-D) is the normal lens I used to have on my old N6006, before I sold it off on eBay (and gave the lens away to a friend who lent it back to me for the purposes of this test).

Construction

The 45mm and the 60mm are both very well constructed in metal, to the same standard as old AI-S lenses. The 50mm is the cheapest lens in Nikon’s line, and it shows: the barrel is plasticky (and the silk-screened focal length has actually partly worn off).

The 45mm and 60mm both have well-damped manual-focus rings without play. The 50mm has a loose focus ring. The 45mm and 50mm are both quite light, while the 60mm is a more substantial and heavier lens (but I think it balances better with the D100 body).

The 45mm is a Tessar, a lens design invented exactly one century ago by Paul Rudolph of Zeiss. It is supplied with a compact lens hood and a neutral filter to protect the lens. Interestingly, the “real thing”, a Contax 45mm f/2.8 Tessar T* by Zeiss, is actually cheaper than the Nikon copy…

The 60mm has a deeply recessed front element that comes out as you crank the helical for close-up and macro shots. The concentric inner barrels rearrange their relative position as well, due to the internal focus design which allows the lens to be used both as a macro and a general standard lens. For general photography, no lens hood is needed as the lens body itself acts as one.

Sharpness

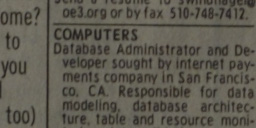

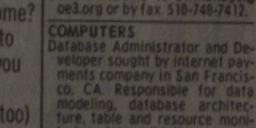

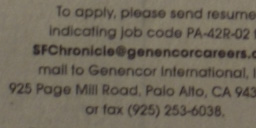

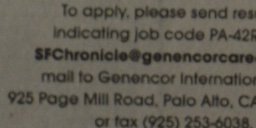

To test lens sharpness, I taped a page from the classified ads section of the Sunday paper to a wall, and lit it with a cheap Ikea halogen lamp. I then set up a Gitzo 2227 tripod with an Arca-Swiss B1 ballhead and shot the newspaper. I tried to keep the framing as close to identical as possible so the shots stay comparable. Before running the tests, I set a custom white balance using a standard 18% gray card. The shots were all taken with a 2s self-timer and the mirror vibration reduction function activated. The 50mm and 60mm were focused with AF; the 45mm was focused manually with the D100’s focus assist “electronic rangefinder”. The NEFs are “compressed NEFs”, around 4MB each.

| Lens | Aperture | JPEG | NEF |
|---|---|---|---|
| 45mm f/2.8P Nikkor | f/2.8 | DSC_0072.jpg | DSC_0072.NEF |
| | f/4 | DSC_0071.jpg | DSC_0071.NEF |
| | f/5.6 | DSC_0070.jpg | DSC_0070.NEF |
| | f/8 | DSC_0069.jpg | DSC_0069.NEF |
| | f/11 | DSC_0068.jpg | DSC_0068.NEF |
| | f/16 | DSC_0067.jpg | DSC_0067.NEF |
| | f/22 | DSC_0066.jpg | DSC_0066.NEF |
| 50mm f/1.8 AF Nikkor | f/1.8 | DSC_0074.jpg | DSC_0074.NEF |
| | f/2.8 | DSC_0073.jpg | DSC_0073.NEF |
| | f/4 | DSC_0075.jpg | DSC_0075.NEF |
| | f/5.6 | DSC_0076.jpg | DSC_0076.NEF |
| | f/8 | DSC_0077.jpg | DSC_0077.NEF |
| | f/11 | DSC_0078.jpg | DSC_0078.NEF |
| | f/16 | DSC_0079.jpg | DSC_0079.NEF |
| | f/22 | DSC_0080.jpg | DSC_0080.NEF |
| 60mm f/2.8D AF Micro-Nikkor | f/2.8 | DSC_0087.jpg | DSC_0087.NEF |
| | f/4 | DSC_0086.jpg | DSC_0086.NEF |
| | f/8 | DSC_0085.jpg | DSC_0085.NEF |
| | f/11 | DSC_0084.jpg | DSC_0084.NEF |
| | f/16 | DSC_0083.jpg | DSC_0083.NEF |
| | f/22 | DSC_0081.jpg | DSC_0081.NEF |
| | f/32 | DSC_0082.jpg | DSC_0082.NEF |

You have to take this test with a grain of salt, as I had no way to verify that the camera axis was precisely perpendicular to the wall, that the wall itself was flat, or that the newspaper lay truly flat against it. Furthermore, as I had to move the tripod to keep the framing identical across the different focal lengths, I may have introduced subtle shifts in alignment. To make the comparison easier, the table below shows 256×128 crops taken at the corners and center of the frame.

| Position | 60mm f/2.8D AF Micro-Nikkor | 50mm f/1.8 AF Nikkor | 45mm f/2.8P Nikkor |
|---|---|---|---|
| Top Left |  |  |  |
| Top Right |  |  |  |
| Center |  |  |  |
| Bottom Left |  |  |  |
| Bottom Right |  |  |  |
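For anyone who wants to repeat the crop comparison, here is a minimal sketch of how the five 256×128 crop positions can be computed. The function name `crop_boxes` is hypothetical, and the 3008×2000 frame size is my assumption for a full-resolution D100 image; substitute the dimensions of your own files. The boxes are given as (left, upper, right, lower) tuples.

```python
def crop_boxes(width, height, crop_w=256, crop_h=128):
    """Return crop rectangles for the four corners and the center of a frame.

    Each rectangle is a (left, upper, right, lower) tuple in pixels.
    """
    right = width - crop_w        # x origin of the right-hand crops
    bottom = height - crop_h      # y origin of the bottom crops
    center_x = (width - crop_w) // 2
    center_y = (height - crop_h) // 2

    origins = {
        "Top Left": (0, 0),
        "Top Right": (right, 0),
        "Center": (center_x, center_y),
        "Bottom Left": (0, bottom),
        "Bottom Right": (right, bottom),
    }
    return {
        name: (x, y, x + crop_w, y + crop_h)
        for name, (x, y) in origins.items()
    }


# Assumed frame size: 3008x2000 pixels (full-resolution D100 image).
boxes = crop_boxes(3008, 2000)
for name, box in boxes.items():
    print(name, box)
```

The tuple layout matches what Pillow's `Image.crop` expects, so each box could be passed directly to `Image.open(path).crop(box)` if you have Pillow installed.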

The differences between the lenses are subtle, and certainly within the error margin for the experiment, but all the lenses show excellent sharpness without fringe chromatic aberrations or the like.

Bokeh

Bokeh is a Japanese word describing how out-of-focus highlights are rendered. It is principally controlled by the shape of the lens diaphragm. All three lenses have a seven-blade diaphragm, but only the 45mm’s blades are rounded.

| Lens | JPEG | NEF | Crop |
|---|---|---|---|
| 45mm f/2.8P Nikkor @ f/8 | DSC_0045.jpg | DSC_0045.NEF |  |
| 45mm f/2.8P Nikkor @ f/2.8 | DSC_0043.jpg | DSC_0043.NEF |  |
| 60mm f/2.8D AF Micro-Nikkor @ f/2.8 | DSC_0038.jpg | DSC_0038.NEF |  |
| 50mm f/1.8 AF Nikkor @ f/2.8 | DSC_0036.jpg | DSC_0036.NEF |  |
| 50mm f/1.8 AF Nikkor @ f/1.8 | DSC_0037.jpg | DSC_0037.NEF |  |

At the largest aperture, the opening is usually limited by a circular portion of the lens barrel rather than by the diaphragm blades, which explains why the bokeh of the 50mm lens is significantly better at f/1.8 than at f/2.8. The next table shows the effect of aperture on the shape of out-of-focus highlights:

| Aperture | 60mm f/2.8D AF Micro-Nikkor | 50mm f/1.8 AF Nikkor | 45mm f/2.8P Nikkor |
|---|---|---|---|
| f/1.8 |  |  |  |
| f/2.8 |  |  |  |
| f/3.3 |  |  |  |
| f/4 |  |  |  |
| f/5.6 |  |  |  |
| f/8 |  |  |  |

The 50mm’s out-of-focus highlights have a hard heptagonal shape beyond f/2.8, as do the 60mm’s beyond f/4. The 45mm clearly benefits from its rounded diaphragm blades.
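To get a rough sense of scale for the openings discussed above, the effective aperture diameter is the focal length divided by the f-number. The sketch below applies that to the three lenses wide open; the function name `aperture_diameter_mm` is hypothetical, and since marked focal lengths and f-numbers are nominal, rounded values, the results are only approximate.

```python
def aperture_diameter_mm(focal_length_mm, f_number):
    """Effective aperture diameter in mm: focal length / f-number."""
    return focal_length_mm / f_number


# Nominal focal length (mm) and maximum aperture for each lens in this test.
lenses = {
    "45mm f/2.8P Nikkor": (45, 2.8),
    "50mm f/1.8 AF Nikkor": (50, 1.8),
    "60mm f/2.8D AF Micro-Nikkor": (60, 2.8),
}

for name, (focal_length, f_number) in lenses.items():
    diameter = aperture_diameter_mm(focal_length, f_number)
    print(f"{name}: about {diameter:.1f} mm wide open")
```

Stopping the 50mm down from f/1.8 to f/2.8 shrinks the nominal opening from roughly 28mm to roughly 18mm, which is the point where the blades take over from the barrel in defining its shape.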

Flare control

Not tested yet.

Barrel distortion

Not tested yet.

Real-world image tests

ISO 200 unless stated otherwise.

| Lens | Image 1 | Image 2 | Image 3 | Image 4 |
|---|---|---|---|---|
| 45mm f/2.8P | f/2.8 1/4000s NEF | f/2.8 1/90s NEF | f/8 1/60s NEF | f/2.8 1/500s NEF |
| 60mm f/2.8 AF Macro | f/2.8 1/4000s NEF | f/2.8 1/80s NEF | ISO 400 f/4 1/60s NEF | |
| 50mm f/1.8 AF | f/2.8 1/4000s NEF | f/2.8 1/100s NEF | f/1.8 1/320s NEF | |

Summary

| Criterion | 60mm f/2.8D AF Micro-Nikkor | 50mm f/1.8 AF Nikkor | 45mm f/2.8P Nikkor |
|---|---|---|---|
| Price | Reasonable | Cheap | Expensive |
| Build quality | Excellent | Mediocre | Excellent |
| Sharpness | Excellent | Excellent | Excellent |
| Bokeh | Good | Fair | Excellent |

Conclusion

The 45mm f/2.8P Nikkor is clearly an excellent lens, and not just a retro head-turner. Unfortunately, with no AF and no macro capability, it is rather expensive for what you get compared to the 60mm f/2.8D AF Micro-Nikkor. The 50mm f/1.8 AF Nikkor has less pleasant out-of-focus highlights than the other two, but it is remarkably sharp and one quarter the price. If its construction is an issue, the famous 50mm f/1.4D AF Nikkor (not tested as I don’t have one on hand) is also an excellent choice.

In any case, any of these lenses is an excellent performer that brings the best out of a Nikon camera, and no photographer’s camera bag should be without one.

Learning more

Here are a few good sites to learn more about Nikkor lenses:

Bjørn Rørslett is a nature photographer with reviews on quite a few Nikkor lenses.

Thom Hogan has a lot of instructive material on the Nikon system, including lens reviews.

Ken Rockwell has many opinionated reviews on Nikkor lenses (sometimes entertaining, sometimes infuriating). How he has the chutzpah to review lenses he has never held in his hands continues to elude me.

For French readers, Dominique Cesari has his take on Nikkor lenses.

The Nikon historical society in Japan has an interesting series, 1001 nights of Nikkor, with the back story on lens design.

Stephen Gandy’s CameraQuest website has reviews on some exotic Nikkor lenses on his Classic Camera Profiles page.