Automating Epson SSL/TLS certificate renewal

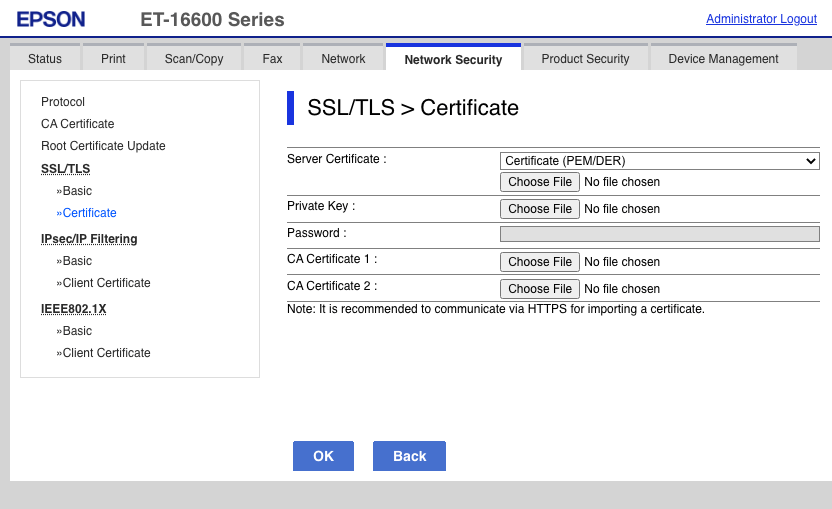

Network-capable Epson printers like my new ET-16600 have a web-based user interface that supports HTTPS. You can even upload publicly recognized certificates from Let’s Encrypt et al. Unfortunately, the only options Epson offers for doing so are a Windows management app (blech) or a manual web form.

When you have to upload a fresh certificate every month (that’s when I automatically renew my Let’s Encrypt certificates), this gets old really fast, and strange errors happen if you forget to do so and end up with an expired certificate.

I wrote a quick Python script to automate this (and yes, I am aware of the XKCDs on the subject of runaway automation):

    #!/usr/bin/env python3
    import requests, html5lib, io

    URL = 'https://myepson.example.com/'
    USERNAME = 'majid'
    PASSWORD = 'your-admin-UI-password-here'
    KEYFILE = '/home/majid/web/acme-tiny/epson.key'
    CERTFILE = '/home/majid/web/acme-tiny/epson.crt'

    ########################################################################
    # step 1, authenticate
    jar = requests.cookies.RequestsCookieJar()
    set_url = URL + 'PRESENTATION/ADVANCED/PASSWORD/SET'
    r = requests.post(set_url, cookies=jar,
                      data={
                          'INPUTT_USERNAME': USERNAME,
                          'access': 'https',
                          'INPUTT_PASSWORD': PASSWORD,
                          'INPUTT_ACCSESSMETHOD': 0,
                          'INPUTT_DUMMY': ''
                      })
    assert r.status_code == 200
    jar = r.cookies

    ########################################################################
    # step 2, get the cert update form iframe and its token
    form_url = URL + 'PRESENTATION/ADVANCED/NWS_CERT_SSLTLS/CA_IMPORT'
    r = requests.get(form_url, cookies=jar)
    tree = html5lib.parse(r.text, namespaceHTMLElements=False)
    # collect every <input> of the form, including the hidden setup token
    data = dict([(f.attrib['name'], f.attrib['value']) for f in
                 tree.findall('.//input')])
    assert 'INPUTT_SETUPTOKEN' in data

    ########################################################################
    # step 3, prepare the key and certs for upload
    data['format'] = 'pem_der'
    # the file fields are sent as multipart files, not as form values
    del data['cert0']
    del data['cert1']
    del data['cert2']
    del data['key']
    upload_url = URL + 'PRESENTATIONEX/CERT/IMPORT_CHAIN'

    # Epson doesn't seem to like bundled certificates,
    # so split the bundle into its components
    with open(CERTFILE, 'r') as f:
        full = f.readlines()
    certno = 0
    certs = dict()
    for line in full:
        if not line.strip():
            continue
        certs[certno] = certs.get(certno, '') + line
        if 'END CERTIFICATE' in line:
            certno = certno + 1
    files = {
        'key': open(KEYFILE, 'rb'),
    }
    for certno in certs:
        # the form only has three certificate slots: cert0, cert1, cert2
        assert certno < 3
        files[f'cert{certno}'] = io.BytesIO(certs[certno].encode('utf-8'))

    ########################################################################
    # step 4, submit the new cert
    r = requests.post(upload_url, cookies=jar,
                      files=files,
                      data=data)

    ########################################################################
    # step 5, verify the printer accepted the cert and is shutting down
    if 'Shutting down' not in r.text:
        print(r.text)
    assert 'Shutting down' in r.text
    print('Epson certificate successfully uploaded to printer.')
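The bundle-splitting step is the part most likely to be useful elsewhere, so here is a minimal sketch of it as a standalone function. The name `split_pem_bundle` and the dummy certificate bodies are purely illustrative and not part of the script; unlike the script, this version also discards any stray text that appears before a `BEGIN CERTIFICATE` line.

```python
def split_pem_bundle(text):
    """Return the certificates in a PEM bundle as a list of PEM strings."""
    certs = []
    current = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines between certificates
        if 'BEGIN CERTIFICATE' in line:
            current = []  # start fresh, dropping anything before the block
        current.append(line)
        if 'END CERTIFICATE' in line:
            certs.append('\n'.join(current) + '\n')
            current = []
    return certs


# Dummy bundle with placeholder bodies, for illustration only.
bundle = (
    "-----BEGIN CERTIFICATE-----\nAAAA\n-----END CERTIFICATE-----\n"
    "\n"
    "-----BEGIN CERTIFICATE-----\nBBBB\n-----END CERTIFICATE-----\n"
)
parts = split_pem_bundle(bundle)
print(len(parts), "certificates found")
```

Each element of the returned list can then be wrapped in `io.BytesIO(...)` and assigned to the `cert0`, `cert1` and `cert2` file fields, as the script does.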

Update (2020-12-29):

If you are having problems with the Scan to Email feature, with the singularly unhelpful message “Check your network or WiFi connection”, it may be that the printer does not recognize the new Let’s Encrypt R3 CA certificate. You can address this by importing it in the Web UI, under the “Network Security” tab, then the “CA Certificate” menu item on the left. The errors I was seeing in my postfix logs were:

    Dec 29 13:30:20 zulfiqar mail.info postfix/smtpd[13361]: connect from epson.majid.org[10.0.4.33]
    Dec 29 13:30:20 zulfiqar mail.info postfix/smtpd[13361]: SSL_accept error from epson.majid.org[10.0.4.33]: -1
    Dec 29 13:30:20 zulfiqar mail.warn postfix/smtpd[13361]: warning: TLS library problem: error:14094418:SSL routines:ssl3_read_bytes:tlsv1 alert unknown ca:ssl/record/rec_layer_s3.c:1543:SSL alert number 48:
    Dec 29 13:30:20 zulfiqar mail.info postfix/smtpd[13361]: lost connection after STARTTLS from epson.majid.org[10.0.4.33]
    Dec 29 13:30:20 zulfiqar mail.info postfix/smtpd[13361]: disconnect from epson.majid.org[10.0.4.33] ehlo=1 starttls=0/1 commands=1/2
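If you would rather spot this condition automatically than stumble on it, a rough sketch along these lines can scan the mail log for it. It assumes log lines shaped like the ones above, and pairs each “unknown ca” alert with the most recent `connect from` line of the same smtpd process ID. The function name `clients_rejecting_ca` and the regular expressions are my own illustration, not anything postfix provides.

```python
import re

# "postfix/smtpd[PID]: connect from HOST[IP]"
CONNECT_RE = re.compile(
    r'postfix/smtpd\[(\d+)\]: connect from ([^\s\[]+)\[([^\]]+)\]')
# "postfix/smtpd[PID]: warning: TLS library problem: ... alert unknown ca ..."
ALERT_RE = re.compile(
    r'postfix/smtpd\[(\d+)\]: warning: TLS library problem: .*alert unknown ca')


def clients_rejecting_ca(lines):
    """Return (hostname, ip) pairs whose TLS handshake ended in 'unknown ca'."""
    clients = {}   # smtpd pid -> (hostname, ip) of its latest connection
    flagged = []
    for line in lines:
        m = CONNECT_RE.search(line)
        if m:
            clients[m.group(1)] = (m.group(2), m.group(3))
            continue
        m = ALERT_RE.search(line)
        if m and m.group(1) in clients:
            client = clients[m.group(1)]
            if client not in flagged:
                flagged.append(client)
    return flagged
```

Feeding it the lines of the log file (for example `clients_rejecting_ca(open(path))`, where `path` is wherever your syslog writes mail messages) returns the clients that refused the mail server’s certificate chain.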

Update (2021-08-01):

The script broke because of changes in Let’s Encrypt’s trust path. Seemingly Epson’s software doesn’t like a certificate file that bundles three PEM certificates, and shows the singularly unhelpful error “Invalid File”. I modified the script to split the bundle into its component certificates. You may also need to upload the root certificates via the “CA Certificate” link above. I added these and also updated the built-in root certificates to version 02.03, and it seems to work:

| File | Serial number |
| --- | --- |
| lets-encrypt-r3-cross-signed.pem | 40:01:75:04:83:14:a4:c8:21:8c:84:a9:0c:16:cd:df |
| isrgrootx1.pem | 82:10:cf:b0:d2:40:e3:59:44:63:e0:bb:63:82:8b:00 |
| lets-encrypt-r3.pem | 91:2b:08:4a:cf:0c:18:a7:53:f6:d6:2e:25:a7:5f:5a |

They are available from the Let’s Encrypt certificates page.
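To double-check that the files you downloaded are the ones listed, something like the following sketch may help. It assumes the hex strings above are the certificates’ serial numbers, that the `openssl` command-line tool is installed, and that the files are saved under the names shown; the helper names `format_serial`, `serial_of` and `check_downloads` are made up for this example.

```python
import os
import subprocess

# Values copied from the list above (assumed to be serial numbers).
EXPECTED_SERIALS = {
    'lets-encrypt-r3-cross-signed.pem':
        '40:01:75:04:83:14:a4:c8:21:8c:84:a9:0c:16:cd:df',
    'isrgrootx1.pem':
        '82:10:cf:b0:d2:40:e3:59:44:63:e0:bb:63:82:8b:00',
    'lets-encrypt-r3.pem':
        '91:2b:08:4a:cf:0c:18:a7:53:f6:d6:2e:25:a7:5f:5a',
}


def format_serial(openssl_output):
    """Turn openssl's 'serial=912B08...' into lowercase '91:2b:08:...'."""
    hex_digits = openssl_output.strip().split('=', 1)[1].lower()
    if len(hex_digits) % 2:
        hex_digits = '0' + hex_digits  # pad to whole bytes
    return ':'.join(hex_digits[i:i + 2]
                    for i in range(0, len(hex_digits), 2))


def serial_of(path):
    """Ask the openssl CLI for the serial number of a PEM certificate."""
    out = subprocess.run(
        ['openssl', 'x509', '-noout', '-serial', '-in', path],
        check=True, capture_output=True, text=True).stdout
    return format_serial(out)


def check_downloads(directory):
    """Return the names of files whose serial differs from the list."""
    return [name for name, expected in EXPECTED_SERIALS.items()
            if serial_of(os.path.join(directory, name)) != expected]
```

Calling `check_downloads('.')` in the directory holding the three PEM files should return an empty list if they all match.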