Funding the vetting of the Software Supply-Chain

TL;DR: A way out of our software supply-chain security mess

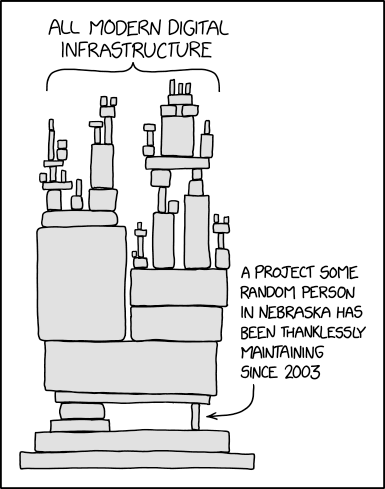

As memorably illustrated by XKCD, the way most software is built today is by bolting together reusable software packages (dependencies) with a thin layer of app-specific integration code that glues it all together. Others have described more eloquently than I can the mess we are in, and the technical issues.

Crises like the Log4j fiasco or the SolarWinds debacle are forcing the community to wake up to something security experts have been warning about for decades: this culture of promiscuous and undiscriminating code reuse is unsustainable. On the other hand, for most software developers without the resources of a Google or Apple behind them, being able to leverage third parties for 80% of their code is too big an advantage to abandon.

This is fundamentally an economic problem:

- To secure a software project to commercial standards (i.e. not the standards required for software that operates a nuclear power plant or the NSA’s classified systems, or that requires validation by formal methods like TLA+), some form of vetting and code reviews of each software dependency (and its own dependencies, and the transitive closure thereof) needs to happen.

- Those code reviews are necessary, difficult, boring and labor-intensive. They require expertise, and somebody needs to pay for that hard work.

- We cannot rely entirely on charitable contributions like Google’s Project Zero or volunteer efforts.

- Each version of a dependency needs to be reviewed. Just because version 11 of foo is secure doesn’t mean a bug or backdoor wasn’t introduced in version 12. On the other hand, reviewing changes takes less effort than the initial review.

- It makes no sense for every project that consumes a dependency to conduct its own duplicative independent code review.

- Securing software is a public good, but there is a free-rider problem.

- Because security is involved, there will be bad actors trying to actively subvert the system, and any solution needs to be robust to this.

- This is too important to allow a private company to monopolize.

- It is not just the Software Bill of Materials that needs to be vetted, but also the build process. SolarWinds was probably breached because state-sponsored hackers compromised their Continuous Integration infrastructure, and there is Ken Thompson’s classic paper on the risks of Trusting Trust (original ACM article as a PDF).

- Trust depends on the consumer and the context. I may trust Google on security, but I certainly don’t on privacy.
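
To make the scope of the vetting concrete, here is a minimal Python sketch of the "transitive closure" point above: it walks a dependency graph and lists the pinned versions that have no review on record. The graph, the package names and the `REVIEWED` set are all made up for illustration; a real tool would read them from a lockfile or an SBOM.

```python
from collections import deque

# Hypothetical dependency graph: (name, version) -> direct dependencies.
DEPS = {
    ("app", "1.0"): [("foo", "12"), ("bar", "3.1")],
    ("foo", "12"): [("baz", "0.9")],
    ("bar", "3.1"): [("baz", "0.9")],
    ("baz", "0.9"): [],
}

# Hypothetical set of versions that already have a review on record.
# Note that foo 11 was reviewed, but the app depends on foo 12.
REVIEWED = {("foo", "11"), ("bar", "3.1")}


def transitive_closure(root, deps):
    """Return every (name, version) reachable from root through its dependencies."""
    seen = set()
    queue = deque(deps.get(root, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        queue.extend(deps.get(node, []))
    return seen


def unreviewed(root, deps, reviewed):
    """List the pinned versions in the closure that lack a review (sorted)."""
    return sorted(transitive_closure(root, deps) - reviewed)


if __name__ == "__main__":
    print(unreviewed(("app", "1.0"), DEPS, REVIEWED))
```

In this toy example the review of foo 11 does not cover foo 12, and baz only shows up because it is a dependency of a dependency.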

I believe the solution will come out of insurance, because that is the way modern societies handle diffuse risks. Cybersecurity insurance suffers from the same adverse-selection risk that health insurance does, which is why premiums are rising and coverage shrinking.

If insurers require companies to provide evidence that their software is reasonably secure, that creates a market-based mechanism to fund the vetting. This is how product safety is handled in the real world, with independent organizations like Underwriters Laboratories or the German TÜVs emerging to provide testing services.

Governments can ditch their current hand-wavy and unfocused efforts and push for the emergence of these solutions, notably by long-overdue legislation on software liability, and at a minimum use their purchasing power to make them table stakes for government contracts (without penalizing open-source solutions, of course).

What we need is, at a minimum:

- Standards that will allow organizations like UL or individuals like Tavis Ormandy to make attestations about specific versions of dependencies.

- These attestations need to have licensing terms associated with them, so the hard work is compensated. Possibly something like copyright or Creative Commons so open-source projects can use them for free but commercial enterprises have to pay.

- Providers of trust metrics to assess review providers. Ideally this would be integrated with SBOM standards like CycloneDX, SPDX or SWID.

- A marketplace that allows consumers of dependencies to request audits of a version that isn’t already covered.

- A collusion-resistant way to ensure there are multiple independent reviews for critical components.

- Automated tools to perform code reviews at lower cost, possibly using Machine Learning heuristics, even if the general problem can be proven to be computationally intractable.
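
As a rough illustration of what a per-version attestation might carry, here is a small Python sketch. Every field name, the license label and the reviewer names are assumptions of mine, not part of CycloneDX, SPDX, SWID or any other existing standard. Counting distinct organizations is only a crude stand-in for independence and does not, by itself, resist collusion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    """Hypothetical record: one reviewer vouching for one exact version."""
    package: str
    version: str
    reviewer: str
    organization: str   # crude proxy for independence between reviewers
    license_terms: str  # made-up label, e.g. "free-for-open-source"


def independent_reviews(attestations, package, version):
    """Return the distinct organizations that attested this exact version."""
    return {
        a.organization
        for a in attestations
        if a.package == package and a.version == version
    }


def is_sufficiently_reviewed(attestations, package, version, min_independent=2):
    """True if at least min_independent distinct organizations reviewed it."""
    return len(independent_reviews(attestations, package, version)) >= min_independent


# Made-up sample data: two reviewers from the same lab count only once.
SAMPLE = [
    Attestation("foo", "12", "alice", "lab-a", "free-for-open-source"),
    Attestation("foo", "12", "bob", "lab-a", "free-for-open-source"),
    Attestation("foo", "12", "carol", "lab-b", "commercial"),
    Attestation("foo", "11", "dave", "lab-c", "commercial"),
]

if __name__ == "__main__":
    print(is_sufficiently_reviewed(SAMPLE, "foo", "12"))
    print(is_sufficiently_reviewed(SAMPLE, "foo", "11"))
```

A consumer could use a check like this to decide whether a version is already covered or whether to request an audit through the marketplace.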