Some people use laptops as their primary computing environment. I am not one of them. Desktop replacement laptops like the MacBook Pro are heavy, and truly portable ones like my MacBook Air are too limited. Even the desktop replacement ones have limited expandability, slow drives, poor screens and lousy keyboards. My workhorse for the last 5 years was a dual 2GHz PowerMac G5. I am surprised I kept it so long, but I guess that says something about the durability of Macs and how you are not required to go on the hardware upgrade treadmill with each release of the OS. To paraphrase Borges, each increasingly bloated version of Windows makes you regret the previous one. I am also surprised at how much residual value the G5 has kept on eBay.

That said, the G5 was showing its age. Stitching panoramas made from 22MP Canon 5DmkII frames in AutoPano Pro is glacially slow, for instance. I was not willing to switch to the Mac Pro until today because the archaic shared bus on previous Intel chips is a severe bottleneck on multi-processor and multicore performance, unlike the switched interconnect used by PowerPC G5 and AMD Opteron processors, both of which claim descent from the DEC Alpha, the greatest CPU architecture ever designed. The new Xeon 3500 and 5500 Mac Pros use Intel’s new Nehalem microarchitecture, which finally does away with the shared bus in favor of a switched interconnect called QuickPath and on-chip memory controllers (i.e. Intel cribbed AMD’s Opteron innovations).

I splurged on the top-of-the-line Mac Pro, with eight 2.93GHz cores, each capable of running two threads simultaneously, and 8GB of RAM. The standard hard drive options were completely lackluster, so I replaced the measly 640GB boot drive with an enterprise-class Intel X25-E SSD. Unfortunately, at 32GB it is just enough to host the OS and applications, so I complemented it with a quiet, power-efficient yet fast 1TB Samsung SpinPoint F1 drive (there is a WD 2TB drive, but it is a slow 5400rpm model, and the 1.5TB Seagate drive has well-publicized reliability problems, even if Seagate did the honorable thing unlike IBM with its defective Deathstars).

I originally planned on using the build-to-order ATI Radeon HD 4870 video card upgrade, but found out the hard way it is incompatible with my HP LP3065 monitor (more below) and had to downgrade back to the nVidia GeForce GT 120. It would have been nice to use BootCamp for games and retire my gaming PC, but I guess that will have to wait. The GT120 is faster than the 8800GTS in the Windows box, in any case.

In no particular order, here are my first impressions:

- The “cheese grater” case is the same size as the G5, but feels lighter.

- The DVD-burner drive tray feels incredibly flimsy.

- Boot times are ridiculously fast. Once you’ve experienced SSDs as I originally did with the MacBook Air, there is no going back to spinning rust.

- I have plenty of Firewire 800 to 400 cables for my FW400 devices (Epson R1800, Nikon Super Coolscan 9000ED, Canon HV20 camcorder) so I will probably not miss the old ports and probably not even need a hub (Firewire 800 hubs are very hard to get).

- The inside of the case is a dream to work with. The drive brackets are easy to swap, the PCIe slots have a spring-loaded retention bar that hooks under the back of the card, and the L brackets are held with thumbscrews, making swapping the cards trivial, with no risk of getting a marginal connection from a poorly seated card.

- The drive mounting brackets have rubber grommets to dampen vibrations, a nice touch. There also seems to be some sort of contact sensor in the rear, purpose unknown.

- There are only two PCIe power connectors, so you can only plug in a single ATI 4870 card even though there are two PCIe x16 slots. The GT 120 does not require PCIe power connectors, so a second card would have to be one of those. Considering the GT 120 is barely more expensive than the Mini DisplayPort to Dual-Link DVI adapter cable, it makes more sense to get the extra video card if you have two monitors.

- The entire CPU and RAM assembly sits on a daughterboard that can be slid out. This will make upgrading RAM (when the modules stop being back-ordered at Crucial) a breeze.

- Built-in Bluetooth means no more flaky USB dongles.

- No extras. The G5 included OmniGraffle, OmniOutliner, Quickbooks, Comic Life and a bunch of other apps like Art Director’s Toolkit. No such frills on the Mac Pro even though it is significantly more expensive even in its base configuration.

- The optical out is now 96kHz 24-bit capable, unlike the G5, which was limited to 44.1kHz 16-bit Red Book audio. I have some lossless 192kHz studio master recordings from Linn Records, so I will have to get a USB DAC to get full use out of them. I am not sure why Apple cheaped out on the audio circuitry in a professional workstation that is going to be heavily used by musicians.

- The G5 was one of the first desktop machines to have a gigabit Ethernet port. Apple didn’t seize the opportunity to lead with 10G Ethernet.

- The annoying Mini DisplayPort is just as proprietary as ADC, but without the redeeming usability benefits of using a single cable for power, video and USB. DisplayPort makes sense for professional use with high-end monitors like the HP Dreamcolor LP2480zx that can actually use 10-bit DACs for ultra-accurate color workflows. There is no mini to regular DisplayPort adapter, unfortunately. Well, the thinness of the cable is a redeeming feature. Apple has always paid attention to using premium ultra-flexible cables everywhere from the power cord to Firewire.

- Transferring over 800GB of data from the old Mac is utterly tedious, even over Firewire 800 using Target Disk mode on the G5…

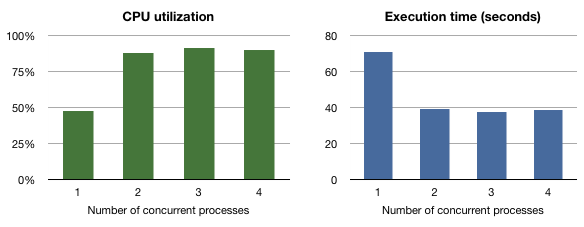

- As could be expected, the Mac Pro wipes the floor with the G5, as measured by Xbench. A more interesting comparison is with the MacBook Air, which also uses an SSD, albeit a slowish one.
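As a back-of-the-envelope check on why the 800GB migration mentioned above drags on, here is a minimal sketch of the best-case transfer time over FireWire. It assumes the nominal 800 and 400 Mbit/s signalling rates and ignores protocol overhead and the speed of the G5's own drives, so real transfers will be noticeably slower. The function name `min_transfer_hours` is purely illustrative.

```python
def min_transfer_hours(size_gb: float, link_mbit_per_s: float) -> float:
    """Lower bound on transfer time in hours.

    Assumes the link runs at its full nominal rate the whole time,
    which ignores protocol overhead and disk throughput.
    """
    size_bits = size_gb * 1e9 * 8                    # decimal gigabytes to bits
    seconds = size_bits / (link_mbit_per_s * 1e6)    # nominal Mbit/s to bit/s
    return seconds / 3600


fw800 = min_transfer_hours(800, 800)   # FireWire 800, nominal rate (assumption)
fw400 = min_transfer_hours(800, 400)   # FireWire 400, nominal rate (assumption)

print(f"FireWire 800: at least {fw800:.1f} hours")
print(f"FireWire 400: at least {fw400:.1f} hours")
```

Even in this idealized case the copy takes a couple of hours over FireWire 800 and twice that over FireWire 400.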

Note (2009-03-17):

The BTO upgrade ATI Radeon HD 4870 video card I initially ordered won’t recognize my HP LP3065 30″ monitor, at least not on the Dual-link DVI port, which essentially renders it useless for me.

Update (2009-03-18):

I went to the Hillsdale Apple Store. The tech was very helpful, and we managed to verify that the ATI Radeon HD 4870 card works fine on an Apple 30″ Cinema Display (via Dual-link DVI) and on a 24″ Cinema LED display (via mini-DisplayPort). The problem is clearly an incompatibility between the ATI Radeon HD 4870 and the HP LP3065.

I am not planning on switching monitors. The HP is probably the best you can get under $3000, and far superior in gamut, ergonomics (tilt/height adjustments) and connectivity (3 Dual-link DVI ports) to the current long-in-the-tooth Apple equivalent, for 2/3 the price. My only option is to downgrade the video card to an nVidia GeForce GT 120. I ordered one from the speedy and reliable folks at B&H and should get it tomorrow (Apple has it back-ordered for a week).

Update (2009-03-19):

I swapped the ATI 4870 for the nVidia GT120. The new card works with the monitor. Whew!

Update (2009-03-29):

I have just learned disturbing news about racial discrimination at B&H. For the reasons I give on RFF, I can no longer recommend shopping there.